Robin Healthcare

B2B: Network Validation Tool

Project Overview

The product team was tasked with creating a specialized network testing tool for implementation teams and physician office administrators. The tool needed to verify secure network information and confirm sufficient bandwidth for Robin medical devices. It would replace a manual, time-consuming process with an automated system that provides comprehensive network health assessments.

The Challenge

Implementation teams traveled to each medical office to test network connections manually, recording only basic speed results at each location within the office. This process:

- Was time-consuming and inefficient

- Provided incomplete network health data

- Created a risk of suboptimal experiences for new Robin clients

- Required specialized technical knowledge that office staff often lacked

UX Research Approach

A thorough discovery phase was essential to understand the needs of multiple user types with varying technical abilities. The research strategy included:

1. Stakeholder Interviews

- Conducted in-depth interviews with implementation team members to understand their current process, pain points, and technical requirements

- Interviewed medical office administrators to understand their technical capabilities and concerns

- Aligned with product and engineering teams to establish technical constraints and business objectives

2. Contextual Inquiry

- Shadowed implementation teams during actual network tests to observe their workflow

- Documented environmental constraints in medical offices (limited space, time pressures)

- Identified opportunity areas where automation could provide the most value

3. Competitive Analysis

- Examined existing network testing tools to identify strengths and weaknesses

- Analyzed why current solutions weren’t meeting our specific needs

- Identified potential design patterns that could be adapted for our context

4. Surveys and Market Research

- Distributed surveys to gather quantitative data about network testing needs

- Reviewed industry standards for network requirements in healthcare settings

- Collected requirements for different Robin medical devices

Key Research Insights

The research revealed several critical insights:

- Technical Knowledge Gap: Wide disparity in technical knowledge between implementation specialists and office administrators

- Time Pressure: Limited time windows for testing in busy medical offices

- Data Continuity Issues: No standardized format for recording or comparing test results across locations

- Result Interpretation: Difficulty translating raw network metrics into actionable insights

- Maintenance Concerns: Need for ongoing network monitoring beyond initial implementation

Persona Development and Empathy Mapping

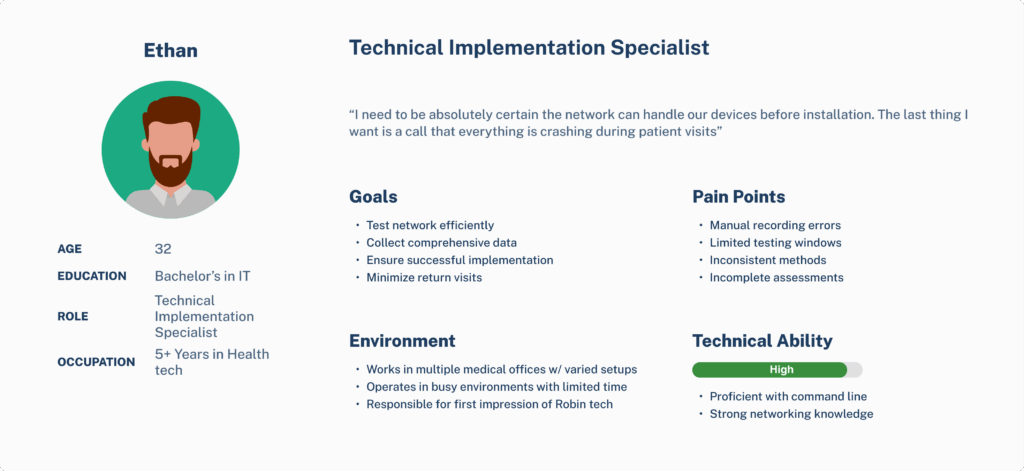

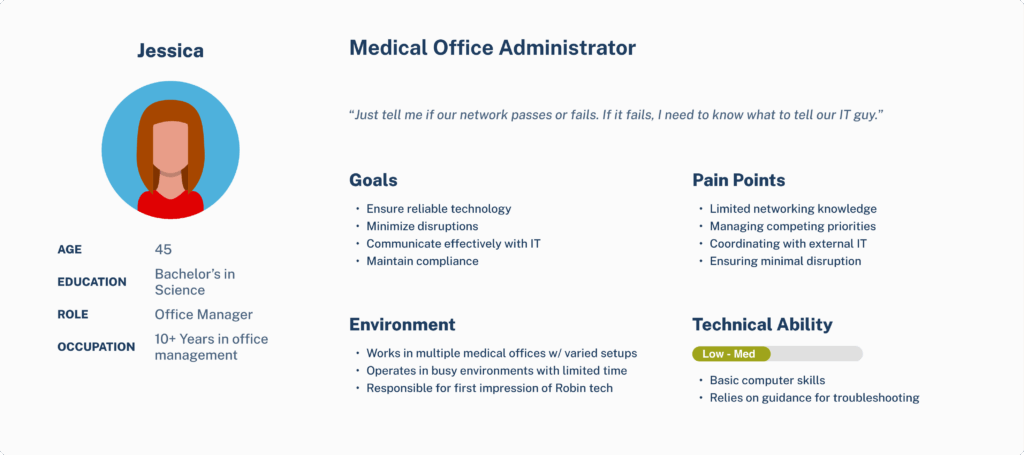

Based on the research data, we created detailed personas:

Primary Personas:

- Technical Implementation Specialist

  - High technical knowledge

  - Focused on efficiency and comprehensive data

  - Needs detailed diagnostic information

  - Often working under time constraints in unfamiliar environments

- Medical Office Administrator

  - Variable technical knowledge (often limited)

  - Concerned primarily with “pass/fail” results

  - Needs clear guidance and simple language

  - Responsible for communicating issues to IT or leadership

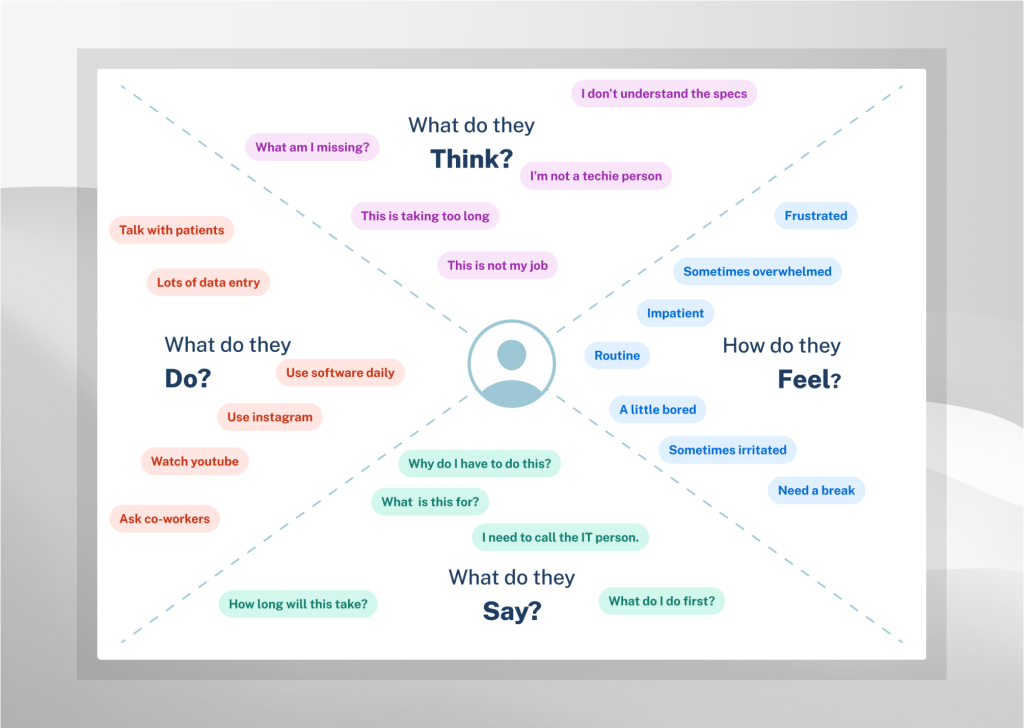

Empathy Mapping

For each persona, we developed comprehensive empathy maps covering:

- What they say, think, feel, and do during the network testing process

- Their pain points and gain opportunities

- Their goals and fears

- Environmental factors affecting their experience

These empathy maps helped ensure our design decisions addressed emotional needs alongside functional requirements, creating a foundation for user journeys aligned with business goals.

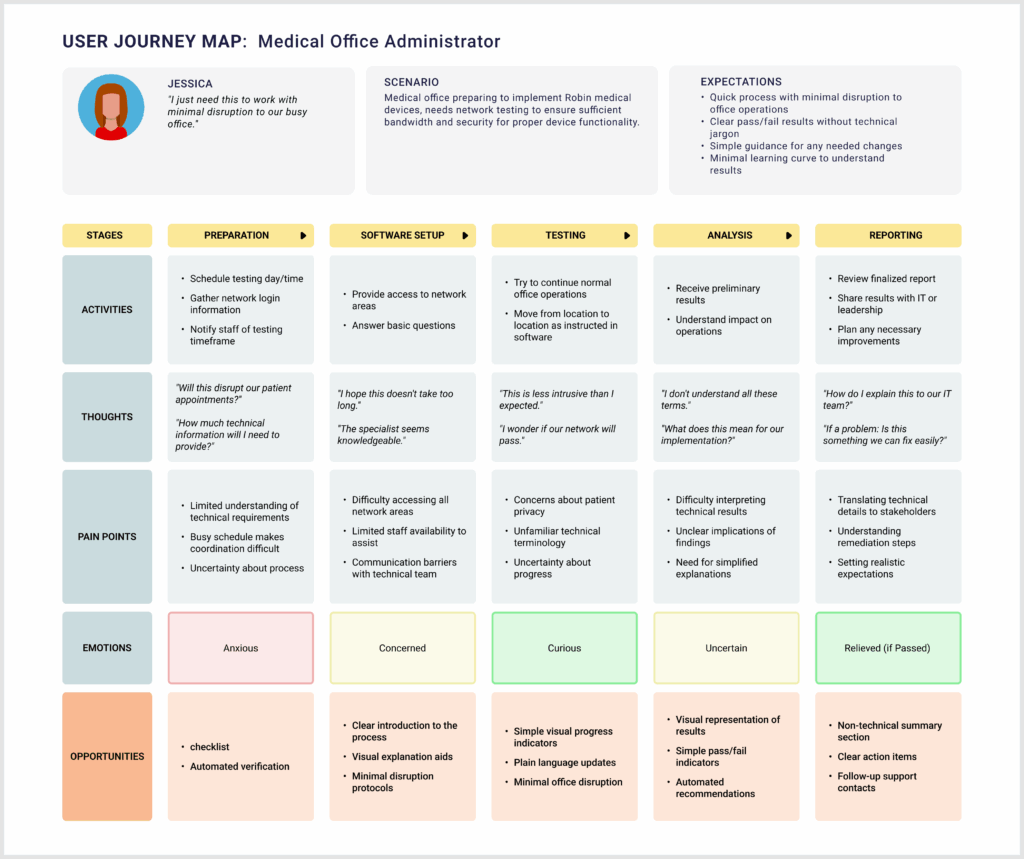

Journey Mapping

For each persona, we mapped the complete journey from preparation to completion:

- Pre-testing preparation

  - Gathering network requirements

  - Scheduling testing windows

  - Coordinating with office staff

- Arrival and setup

  - Locating testing points

  - Setting up equipment

  - Explaining the process to office staff

- Testing execution

  - Running multiple test types

  - Moving between locations

  - Documenting results

- Results analysis

  - Interpreting test data

  - Identifying potential issues

  - Making recommendations

- Follow-up actions

  - Sharing reports with stakeholders

  - Planning remediation if needed

  - Scheduling follow-up testing

The journey maps highlighted key moments of friction, particularly in the testing execution and results analysis phases, which became primary focus areas for our solution.

User Stories and Acceptance Criteria

We developed detailed user stories following the format: “As a [persona], I want to [action] so that [benefit].”

Examples included:

- “As an implementation specialist, I want to automatically run a complete network assessment at each location so that I can collect consistent, comprehensive data without manual effort.”

- “As an office administrator, I want to understand whether my network meets Robin’s requirements without technical jargon so that I can communicate effectively with our IT team.”

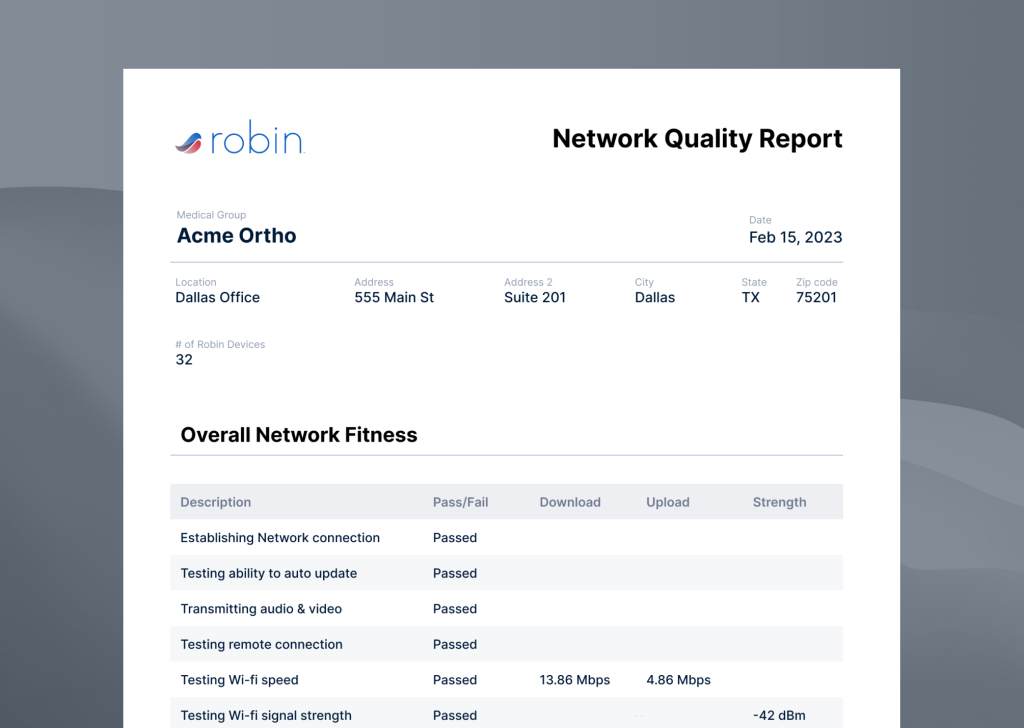

- “As an implementation specialist, I want to generate a standardized report of test results so that I can share objective findings with the client and our internal teams.”

Each user story included clear acceptance criteria defining what successful implementation would look like.

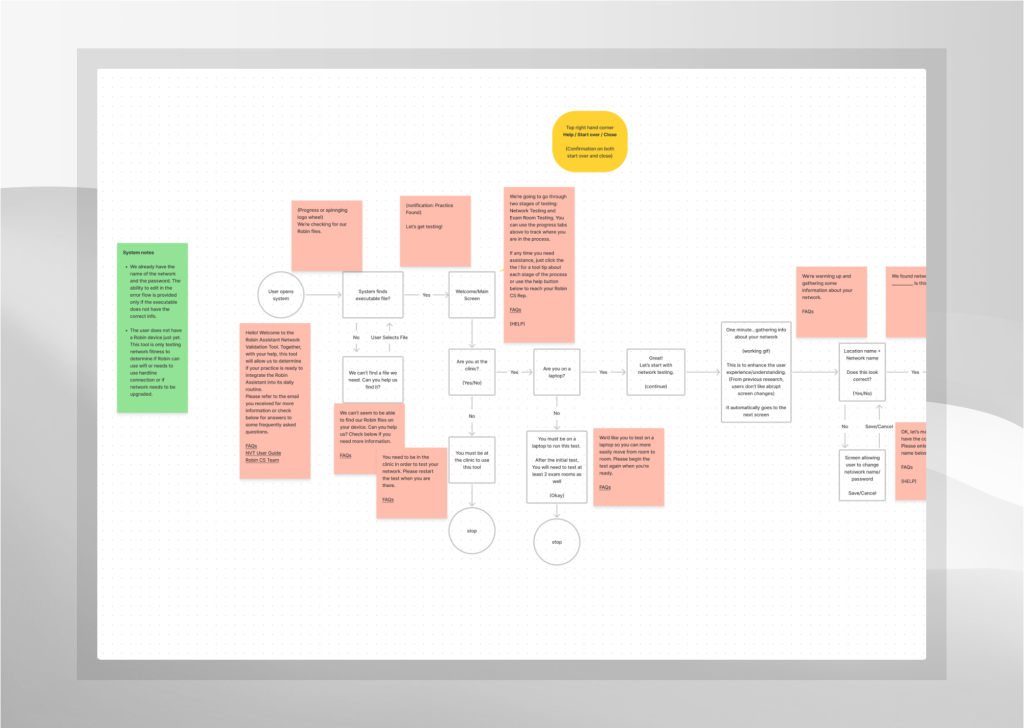

User Flows and Information Architecture

We created detailed user flows showing each step in the testing process:

- Application Launch Flow

- Software initialization

- User authentication

- Test location selection

- Test Configuration Flow

- Test type selection

- Parameter customization options

- Saving test profiles for future use

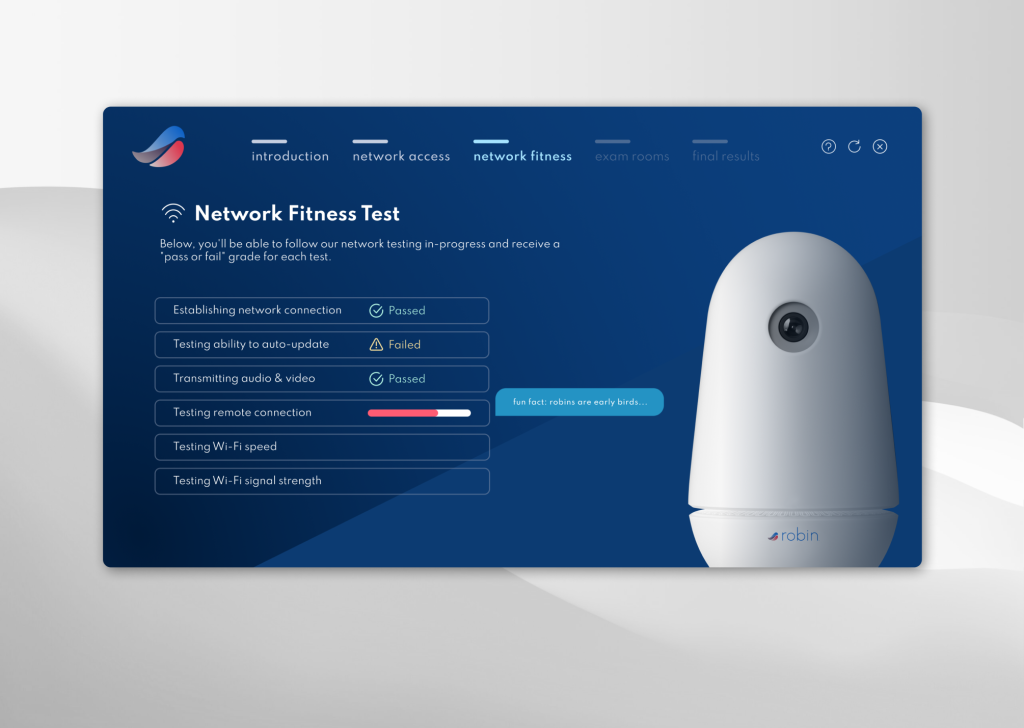

- Testing Execution Flow

- Sequential test execution

- Real-time progress indicators

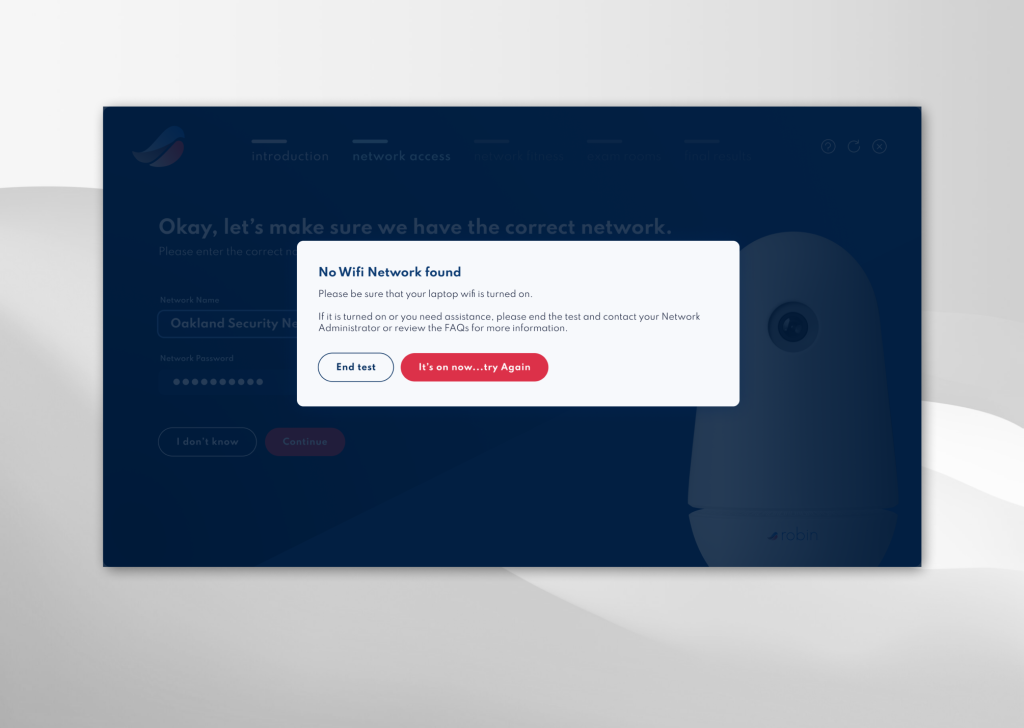

- Error handling and recovery paths

- Results Review Flow

- Data visualization options

- Comparison against requirements

- Pass/fail indicators for non-technical users

- Reporting Flow

- Report generation

- Sharing options

- Data storage and retrieval
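
The Results Review Flow compares measured values against device requirements and reduces them to a pass/fail indicator for non-technical users. A minimal Python sketch of that comparison follows. The metric names, threshold values, and the `evaluate` and `overall_status` helpers are hypothetical and chosen only for illustration; they are not Robin's actual requirements or code.

```python
from __future__ import annotations

from dataclasses import dataclass


@dataclass(frozen=True)
class Requirement:
    """One network requirement. Names and limits here are illustrative only."""
    metric: str
    limit: float
    higher_is_better: bool


# Hypothetical thresholds, not Robin's published requirements.
REQUIREMENTS = [
    Requirement("download_mbps", 25.0, higher_is_better=True),
    Requirement("upload_mbps", 10.0, higher_is_better=True),
    Requirement("latency_ms", 100.0, higher_is_better=False),
]


def evaluate(measured: dict[str, float]) -> dict[str, bool]:
    """Return pass (True) or fail (False) for each requirement.

    A metric missing from the measurements counts as a failure.
    """
    results = {}
    for req in REQUIREMENTS:
        value = measured.get(req.metric)
        if value is None:
            results[req.metric] = False
        elif req.higher_is_better:
            results[req.metric] = value >= req.limit
        else:
            results[req.metric] = value <= req.limit
    return results


def overall_status(results: dict[str, bool]) -> str:
    """Collapse per-metric results into the single label an administrator sees."""
    return "PASS" if all(results.values()) else "FAIL"


if __name__ == "__main__":
    # Made-up measurements for one test location.
    sample = {"download_mbps": 80.0, "upload_mbps": 8.5, "latency_ms": 40.0}
    checks = evaluate(sample)
    print(overall_status(checks), checks)
```

Keeping the per-metric results separate from the overall label mirrors the two personas: a specialist view could show every check, while an administrator view could show only the final status.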

Initial Copy Development

Working with copywriters, we focused on:

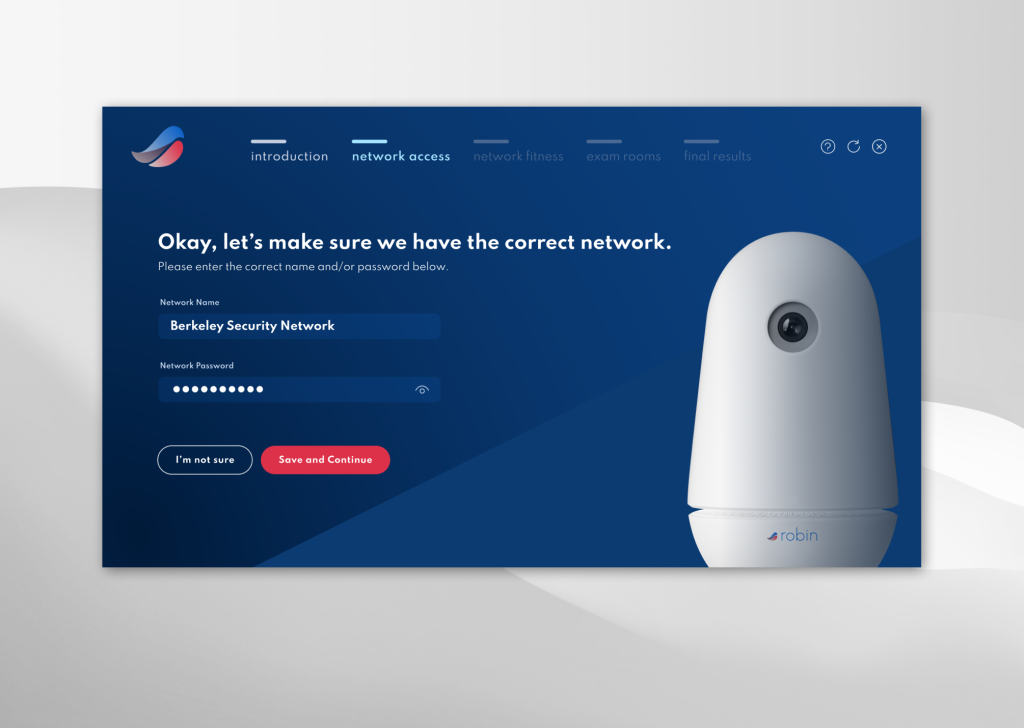

- Simplifying technical language for the office administrator persona

- Creating clear, actionable error messages

- Developing help text and tooltips for complex functions

- Establishing a consistent voice that balanced technical precision with accessibility

The copywriting process revealed several gaps in our initial flows, particularly around error handling and edge cases, which we addressed before moving to wireframing.

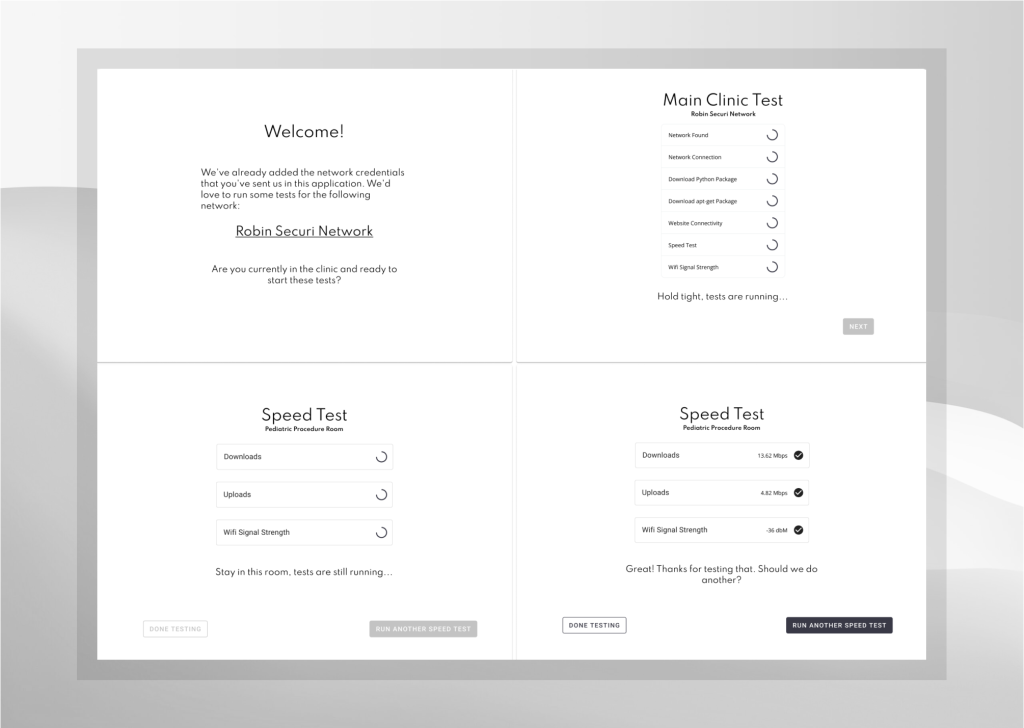

Wireframing and Prototyping

Based on the refined flows, we developed wireframes for key screens:

- Dashboard and Navigation

- Clear entry points for different test types

- Status indicators for completed tests

- Quick access to saved results

- Test Configuration Screens

- Simplified controls for basic users

- Advanced options for technical users

- Preset configurations for common scenarios

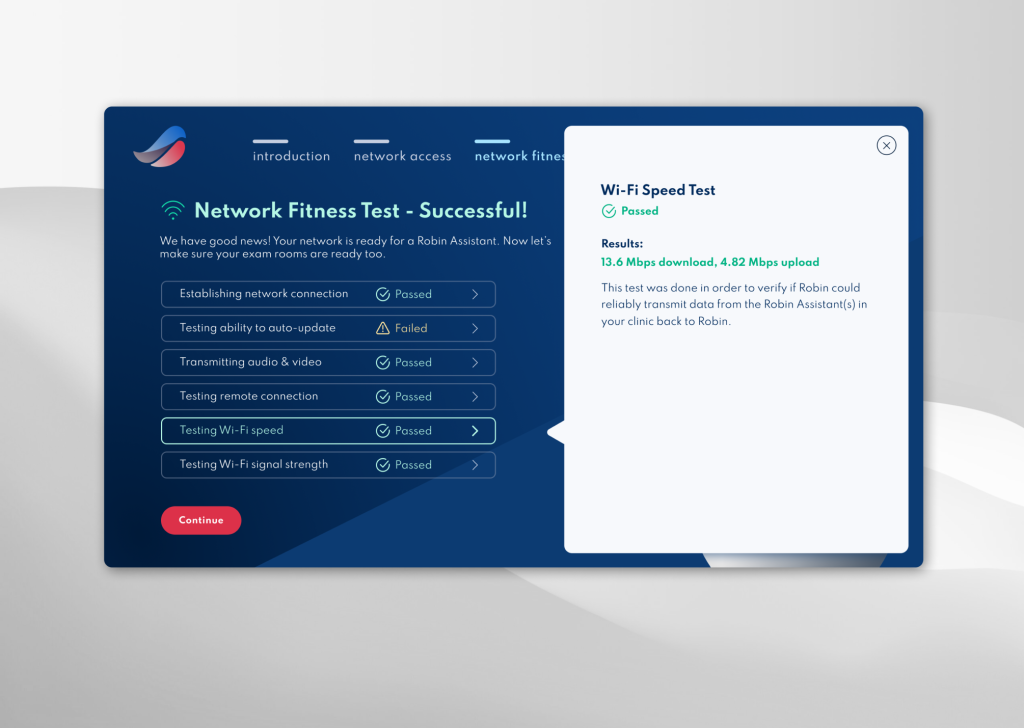

- Testing Execution Screens

- Real-time visualization of test progress

- Clear success/failure indicators

- Contextual help for troubleshooting

- Results and Reporting

- Visual representations of key metrics

- Comparative analysis against requirements

- One-click report generation

These wireframes were then combined into an interactive prototype for initial validation with stakeholders.

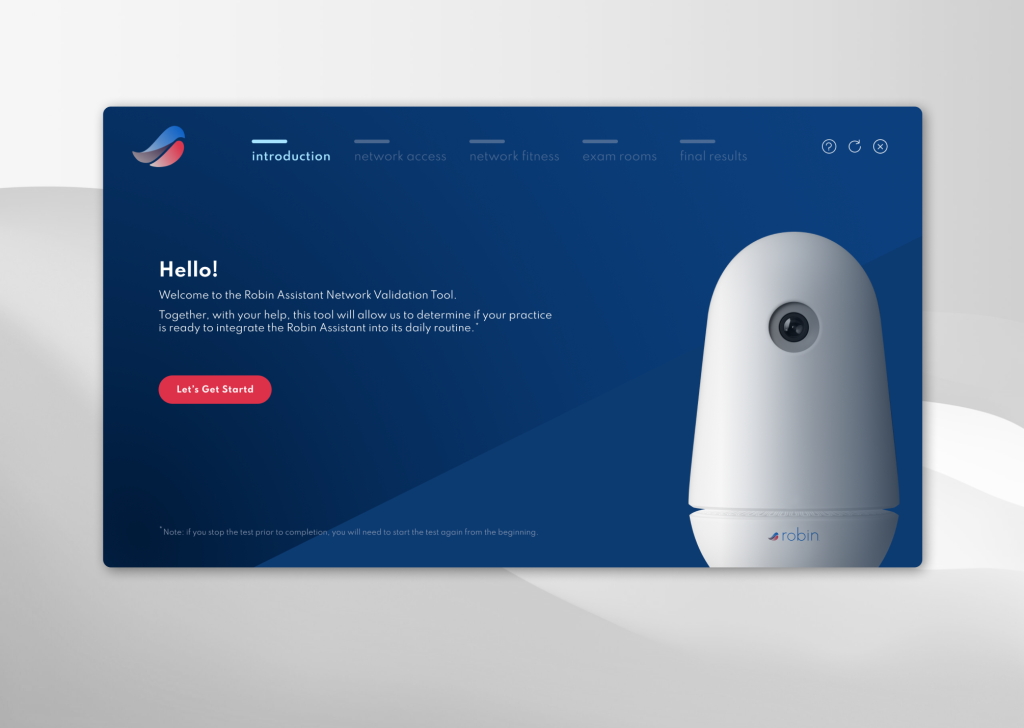

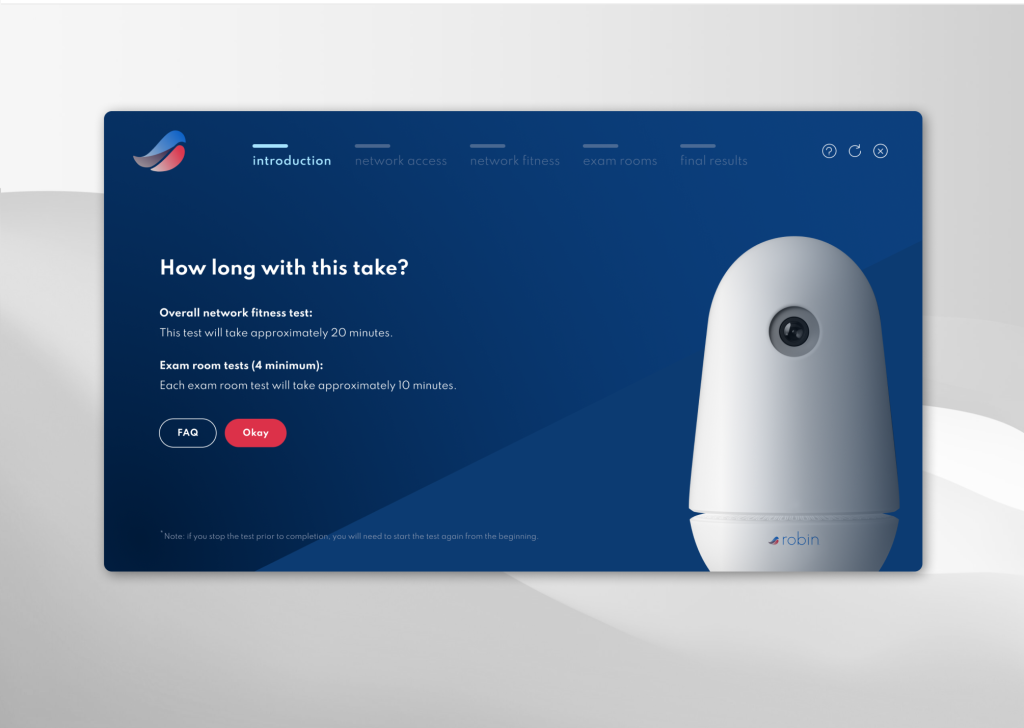

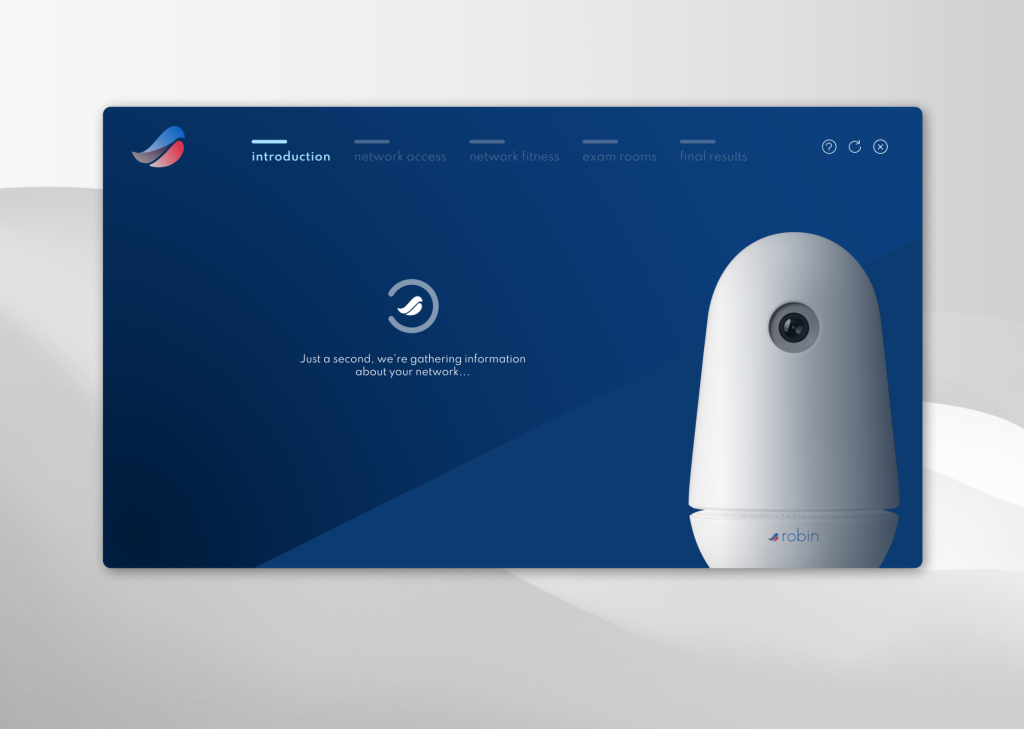

High-Fidelity Design

Due to project time constraints, we moved directly from wireframing to high-fidelity design, focusing on:

- Creating a visual language consistent with the Robin brand

- Developing clear state indicators (success, warning, error)

- Designing data visualizations that communicated complex information simply

- Ensuring accessibility standards were met for all users

The design system included specifications for over 65 screens, including various states (loading, error, success) and modals for each primary function.

Usability Testing

Once the engineering team delivered the MVP, we conducted comprehensive usability testing:

- Test Methodology

  - Recruited participants representing both primary personas

  - Created realistic test scenarios based on common use cases

  - Observed both remote and in-person testing sessions

- Key Testing Metrics

  - Task completion rates

  - Time-on-task measurements

  - Error frequencies

  - Subjective satisfaction ratings

- Findings and Insights

  - Users successfully completed basic testing workflows

  - Office administrators struggled with interpreting some results

  - Implementation specialists wanted more detailed diagnostic data

  - The JSON configuration method proved cumbersome for all users

  - Report printing functionality was highly valued but incomplete
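
As an illustration of how the quantitative testing metrics listed above (task completion rate, time on task, error frequency) might be computed from session logs, here is a small Python sketch. The `Session` structure, the `summarize` helper, and the sample values are assumptions made for this example, not the study's actual data or tooling. Subjective satisfaction ratings are left out because they depend on the survey instrument used.

```python
from __future__ import annotations

from dataclasses import dataclass
from statistics import mean


@dataclass(frozen=True)
class Session:
    """One participant's attempt at one task (illustrative structure)."""
    persona: str
    completed: bool
    seconds: float
    errors: int


def summarize(sessions: list[Session]) -> dict[str, float]:
    """Compute completion rate, mean time on completed tasks, and errors per session."""
    if not sessions:
        raise ValueError("no sessions to summarize")
    completed_times = [s.seconds for s in sessions if s.completed]
    return {
        "completion_rate": len(completed_times) / len(sessions),
        # Time on task is averaged over completed attempts only.
        "mean_seconds_completed": mean(completed_times) if completed_times else float("nan"),
        "errors_per_session": sum(s.errors for s in sessions) / len(sessions),
    }


if __name__ == "__main__":
    # Made-up sessions, not results from the actual usability study.
    sessions = [
        Session("specialist", True, 120.0, 0),
        Session("administrator", True, 180.0, 2),
        Session("administrator", False, 300.0, 4),
    ]
    print(summarize(sessions))
```

Filtering the session list by `persona` before calling `summarize` would give a per-persona breakdown, which is one way to surface differences between the two user groups.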

MVP Launch and Iteration

After addressing critical issues identified in testing, we launched the MVP with a plan for continuous improvement:

- Initial Release

  - Core testing functionality for implementation teams

  - Basic reporting capabilities

  - Simple pass/fail indicators for office administrators

- First Iteration Improvements

  - Replaced JSON configuration with a cloud-based solution

  - Enhanced report generation with printable formats

  - Added historical comparison features

- Ongoing Development

  - Location-specific reference data

  - Automated recommendations for network improvements

  - Integration with Robin device management systems

  - Self-service testing capabilities for office administrators

Results and Impact

The network testing tool delivered significant improvements:

- Efficiency Gains

  - Reduced testing time by 70% per location

  - Eliminated manual documentation needs

  - Standardized testing procedures across all implementation teams

- Data Quality Improvements

  - Comprehensive network health assessment beyond basic speed tests

  - Consistent metrics across all installations

  - Historical tracking of performance changes

- User Experience Benefits

  - Clearer communication of requirements to clients

  - Reduced technical knowledge barriers

  - Improved confidence in network readiness before device installation

- Business Impact

  - Higher client satisfaction ratings during implementation

  - Fewer post-installation network-related support tickets

  - More accurate resource allocation for implementation teams

This project demonstrated how a structured UX workflow, from research through testing and iteration, can transform a manual, technical process into an efficient, user-friendly solution that serves multiple user types with varying needs and technical abilities.